OPOS (OLE for Retail POS) is an architecture that allows Point-of-Sale (POS) devices to be integrated with the Microsoft Windows family of operating systems. Devices are divided into categories called device classes, such as PIN Pads and Cash Drawers.

OPOS model

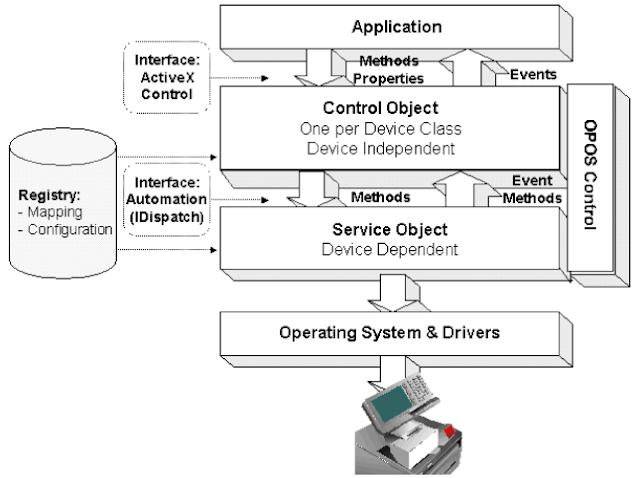

An OPOS Control is a software abstraction layer for a device class. It consists of a Control Object and a Service Object. A device is characterized by its properties, methods and events, and a POS application accesses the device through the OPOS Control APIs that expose them. The layers are shown in the figure above, taken from the UnifiedPOS specification document[1].

Control Object (CO)

- exposes a set of properties, methods and events

- is an ActiveX control

- can be downloaded from the web

Service Object (SO)

- called by the CO

- implements OPOS functionality for a specific device class

- usually provided by the device manufacturer or third parties

How to use an OPOS Control?

The application usually goes through the following steps with the Control Object:

- add event handlers to get event data

- call Open to open the device

- call ClaimDevice to gain exclusive access to the device

- set DeviceEnabled property to TRUE

- call any other methods to perform required operations

- call ReleaseDevice to release the device from exclusive access mode

- call Close to release the device and associated resources when the application is finished using the device
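
The sequence above can be sketched in portable C++ against a mock. This is only an illustration of the call order: MockControl, Result and runTypicalSession are hypothetical names invented for this sketch, not part of the OPOS headers, and a real application would call the ActiveX Control Object instead.

```cpp
#include <cassert>
#include <string>

// Hypothetical result codes for this sketch. Real OPOS result codes
// (OPOS_SUCCESS and friends) come from the OPOS header files.
enum class Result { Success, Closed, NotClaimed };

// Hypothetical stand-in for a Control Object. It only tracks state so
// that the required call order becomes visible.
class MockControl {
public:
    Result Open(const std::string& deviceName) {
        name_ = deviceName;
        open_ = true;
        return Result::Success;
    }
    Result ClaimDevice(long /*timeoutMs*/) {
        if (!open_) return Result::Closed;
        claimed_ = true;
        return Result::Success;
    }
    Result SetDeviceEnabled(bool enabled) {
        if (!open_) return Result::Closed;
        if (!claimed_) return Result::NotClaimed;
        enabled_ = enabled;
        return Result::Success;
    }
    Result ReleaseDevice() {
        if (!open_) return Result::Closed;
        if (!claimed_) return Result::NotClaimed;
        enabled_ = false;
        claimed_ = false;
        return Result::Success;
    }
    Result Close() {
        if (!open_) return Result::Closed;
        enabled_ = claimed_ = open_ = false;
        return Result::Success;
    }
    bool isOpen() const { return open_; }
    bool isEnabled() const { return enabled_; }

private:
    std::string name_;
    bool open_ = false;
    bool claimed_ = false;
    bool enabled_ = false;
};

// Walks through the steps in order; returns true if every step succeeded.
bool runTypicalSession(MockControl& control, const std::string& deviceName) {
    // Event handlers would be attached here, before Open.
    if (control.Open(deviceName) != Result::Success) return false;
    if (control.ClaimDevice(1000) != Result::Success) return false;
    if (control.SetDeviceEnabled(true) != Result::Success) return false;
    assert(control.isEnabled());
    // Device-specific calls would go here.
    if (control.ReleaseDevice() != Result::Success) return false;
    return control.Close() == Result::Success;
}
```

Calling runTypicalSession(control, "MyVirtualDevice") on a fresh MockControl succeeds, while calling ClaimDevice before Open returns Result::Closed in this mock.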

Relationship between CO and SO methods

The table below shows which Service Object method is called for the given Control Object method. For example, when the application calls CO's Open method, the CO calls the SO's OpenService method.

| Category | Control Object | Service Object |
|---|---|---|
| method | Open | OpenService |
| | Close | CloseService |
| | other method | corresponding other method |
| property | get String property | GetPropertyString |
| | set String property | SetPropertyString |
| | get Numeric property | GetPropertyNumber |
| | set Numeric property | SetPropertyNumber |
| | get/set other property type | corresponding GetProperty/SetProperty |
| event | event request methods: SOData, SODirectIO, SOError, SOOutputComplete and SOStatusUpdate | SO calls a CO event request method |
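
The mapping can be illustrated with a small portable C++ sketch. It is not COM code: FakeControlObject, FakeServiceObject, EventSink and kDeviceNameIndex are hypothetical names made up here, and the real objects talk to each other through IDispatch. The sketch only shows the direction of each call: the CO forwards methods and property access to the SO, and the SO calls back into the CO to raise an event.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for the CO's event request methods (only SOData here).
struct EventSink {
    virtual ~EventSink() = default;
    virtual void SOData(long status) = 0;
};

// Made-up property index for this sketch; real indices are PIDX_ constants.
constexpr long kDeviceNameIndex = 1;

// Hypothetical stand-in for a Service Object.
class FakeServiceObject {
public:
    long OpenService(const std::string& /*deviceClass*/,
                     const std::string& deviceName, EventSink* sink) {
        assert(sink != nullptr);
        sink_ = sink;       // remember the CO so events can be delivered later
        name_ = deviceName;
        return 0;           // 0 stands for success in this sketch
    }
    long CloseService() {
        sink_ = nullptr;
        return 0;
    }
    std::string GetPropertyString(long /*propIndex*/) const { return name_; }

    // Simulates the device producing data: the SO calls the CO's SOData.
    void simulateSwipe() {
        if (sink_ != nullptr) sink_->SOData(0);
    }

private:
    EventSink* sink_ = nullptr;
    std::string name_;
};

// Hypothetical stand-in for a Control Object that forwards to the SO.
class FakeControlObject : public EventSink {
public:
    explicit FakeControlObject(FakeServiceObject& so) : so_(so) {}

    long Open(const std::string& deviceName) {           // Open -> OpenService
        return so_.OpenService("MSR", deviceName, this);
    }
    long Close() { return so_.CloseService(); }          // Close -> CloseService
    std::string DeviceName() const {                     // get String property
        return so_.GetPropertyString(kDeviceNameIndex);
    }
    void SOData(long status) override {                  // called by the SO
        dataEvents.push_back(status);
    }

    std::vector<long> dataEvents;  // events that would be fired to the application

private:
    FakeServiceObject& so_;
};
```

After Open, a simulated swipe on the fake SO shows up in the CO's dataEvents list; after Close, the SO no longer has a CO to call.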

Control Object Open method

To write a Service Object, it is important to know how the Control Object's Open method works. If the Service Object does not satisfy certain requirements, the CO's Open method fails and the application cannot use the OPOS Control. The CO's Open method, shown below, takes a DeviceName as its argument.

long Open(BSTR DeviceName);

The CO Open method will

- query the registry to find the device class and device name

- load the service object for the device

- check if at least the methods defined in the initial OPOS version for the device class are supported by the SO

- call SO's OpenService method

- call SO's GetPropertyNumber(PIDX_ServiceObjectVersion) to get the service object version, then check that its major version number matches the CO's

- check if all methods that must be supported by the particular SO version are available
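
The checks above can be modelled roughly as follows. This is a simulation under stated assumptions, not the CO's actual implementation: the registry is a plain map, FakeSo, OpenStatus and simulateOpen are hypothetical names, and the method lists passed in are placeholders rather than the real per-version requirements.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model of a loaded Service Object.
struct FakeSo {
    std::set<std::string> methods;  // method names the SO exposes
    long version;                   // what it returns for PIDX_ServiceObjectVersion
    bool opened;                    // set once OpenService has been "called"
};

// Hypothetical outcome codes for this sketch.
enum class OpenStatus { Success, NotRegistered, MissingMethod, VersionMismatch };

OpenStatus simulateOpen(
    const std::map<std::string, FakeSo*>& registry,  // device name -> SO
    const std::string& deviceName,
    long coVersion,
    const std::set<std::string>& baseMethods,  // required since the initial version
    const std::vector<std::pair<long, std::string>>& addedInMinor) {
    // Steps 1 and 2: look up the device name and "load" its SO.
    auto it = registry.find(deviceName);
    if (it == registry.end()) return OpenStatus::NotRegistered;
    assert(it->second != nullptr);
    FakeSo& so = *it->second;

    // Step 3: the methods of the initial version must be present.
    for (const auto& method : baseMethods) {
        if (so.methods.count(method) == 0) return OpenStatus::MissingMethod;
    }

    // Step 4: call OpenService.
    so.opened = true;

    // Step 5: the major version numbers must match.
    if (so.version / 1000000 != coVersion / 1000000) {
        so.opened = false;
        return OpenStatus::VersionMismatch;
    }

    // Step 6: methods required by the SO's own minor version must be present.
    const long soMinor = (so.version / 1000) % 1000;
    for (const auto& entry : addedInMinor) {
        if (entry.first <= soMinor && so.methods.count(entry.second) == 0) {
            so.opened = false;
            return OpenStatus::MissingMethod;
        }
    }
    return OpenStatus::Success;
}
```

The practical consequence for an SO author is visible in step 6: reporting a higher version number than the SO really implements makes Open fail, because the CO then expects the methods that belong to that version.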

Service Object version

Three version levels are specified for SO version.

Major: The millions place

Minor: The thousands place

Build: The units place, a build number chosen by the SO developer

For example, the version number 1002038 is interpreted as 1.2.38.

An SO will work with a CO as long as their major version numbers match.
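
The arithmetic behind this encoding is simple enough to show directly. The helper names below (SoVersion, decodeVersion, versionToString, majorVersionsMatch) are invented for this sketch and do not come from the OPOS headers.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper type for this sketch.
struct SoVersion {
    long majorPart;  // millions place
    long minorPart;  // thousands place
    long build;      // units place
};

// Splits a version number such as 1002038 into its three levels.
SoVersion decodeVersion(long version) {
    assert(version >= 0);
    SoVersion v;
    v.majorPart = version / 1000000;
    v.minorPart = (version / 1000) % 1000;
    v.build = version % 1000;
    return v;
}

// Formats a version number as "major.minor.build".
std::string versionToString(long version) {
    const SoVersion v = decodeVersion(version);
    return std::to_string(v.majorPart) + "." + std::to_string(v.minorPart) +
           "." + std::to_string(v.build);
}

// An SO and a CO are compatible when their major versions are equal.
bool majorVersionsMatch(long coVersion, long soVersion) {
    return decodeVersion(coVersion).majorPart == decodeVersion(soVersion).majorPart;
}
```

With these helpers, versionToString(1002038) yields "1.2.38", and a 1.x Service Object matches any 1.x Control Object regardless of minor and build numbers.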

Service Object for a simple virtual MSR

Now that we know the basics, we'll create a minimal Service Object for a virtual MSR (Magnetic Stripe Reader) that supports the following functionality:

open, claim, enable, enable data events, get a data event notification, release and close.

The figure below lists the MSR CO methods and the OPOS versions in which they were first introduced.

First we should download the latest OPOS Control Objects and header files from here. Download the CCO Runtime zip file, then register the MSR CO. Then follow the steps given below to create the SO.

Steps

1. Launch Visual Studio and create an ATL Project. Name it MyVirtualMsr. Select DLL as the Application Type and check Allow merging proxy/stub code in the ATL Project Wizard.

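Figure: Create MyVirtualMsr ATL project

Figure: Selecting Application type and merging proxy/stub code

2. Add an ATL Simple Object to the project. The later steps assume that its class is CMyVirtualMsrSO, its interface is IMyVirtualMsrSO and its ProgID is VIRTUAL.OPOS.MSR.so.

Figure: Setting ProgID

Figure: Options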

3. Add the following methods to the IMyVirtualMsrSO interface. (Right-click the IMyVirtualMsrSO interface in Class View and select Add Method from the menu. Make sure you set the out and retval parameter attributes for pRC.)

    HRESULT OpenService(BSTR DeviceClass, BSTR DeviceName, IDispatch* pDispatch, [out, retval] long* pRC);
    HRESULT CheckHealth(long Level, [out, retval] long* pRC);
    HRESULT ClaimDevice(long ClaimTimeout, [out, retval] long* pRC);
    HRESULT ClearInput([out, retval] long* pRC);
    HRESULT CloseService([out, retval] long* pRC);
    HRESULT COFreezeEvents(VARIANT_BOOL Freeze, [out, retval] long* pRC);
    HRESULT DirectIO(long Command, [in, out] long* pData, [in, out] BSTR* pString, [out, retval] long* pRC);
    HRESULT ReleaseDevice([out, retval] long* pRC);
    HRESULT GetPropertyNumber(long PropIndex, [out, retval] long* pNumber);
    HRESULT GetPropertyString(long PropIndex, [out, retval] BSTR* pString);
    HRESULT SetPropertyNumber(long PropIndex, long Number);
    HRESULT SetPropertyString(long PropIndex, BSTR PropString);

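4. Add a member variable to the CMyVirtualMsrSO class to hold the Control Object's dispatch interface. The SO uses it later to call the CO's event request methods.

    CComDispatchDriver m_pDriver;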

5. Include OposMsr.hi from the Include directory of the CCO Runtime that you downloaded earlier. This includes the definitions of various OPOS constants such as ResultCodes and property IDs.

#include "OposMsr.hi"

6. Modify the OpenService method as shown below:

    STDMETHODIMP CMyVirtualMsrSO::OpenService(BSTR DeviceClass, BSTR DeviceName, IDispatch* pDispatch, LONG* pRC)
    {
        m_pDriver = pDispatch;
        *pRC = OPOS_SUCCESS;
        return S_OK;
    }

7. In each of the remaining methods that take a pRC parameter, set the return code to OPOS_SUCCESS:

*pRC = OPOS_SUCCESS;

8. Modify the GetPropertyNumber method as shown below to return a valid version number

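    STDMETHODIMP CMyVirtualMsrSO::GetPropertyNumber(LONG PropIndex, LONG* pNumber)
    {
        // Give the out parameter a defined value for properties we don't handle.
        *pNumber = 0;
        if (PIDX_ServiceObjectVersion == PropIndex)
        {
            // 1000111 is interpreted as version 1.0.111.
            *pNumber = 1000111;
        }
        return S_OK;
    }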

9. Create a registry key under OLEforRetail for our device. We'll call our virtual MSR 'MyVirtualDevice'; this is the name that we'll pass to the CO's Open method. The default value of the MyVirtualDevice key must be the ProgID we entered when creating the ATL object, which in our case is VIRTUAL.OPOS.MSR.so.

If you are on a 64-bit machine:

[HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\OLEforRetail\ServiceOPOS\MSR\MyVirtualDevice]

@="VIRTUAL.OPOS.MSR.so"

If you are on a 32-bit machine:

[HKEY_LOCAL_MACHINE\SOFTWARE\OLEforRetail\ServiceOPOS\MSR\MyVirtualDevice]

@="VIRTUAL.OPOS.MSR.so"

10. Now build the project. By default, the project is set to register the DLL after the build. If you didn't launch Visual Studio with admin privileges, registration fails with an error. You can either launch Visual Studio with admin privileges, or disable registration by setting the Register Output option in the Linker settings to No and then register the DLL manually. To register manually,

if you are on a 64-bit machine:

c:\windows\syswow64\regsvr32 MyVirtualMsr.dll

if you are on a 32-bit machine:

c:\windows\system32\regsvr32 MyVirtualMsr.dll

Now we have satisfied all the conditions for a minimal valid MSR Service Object: we added the registry entry, implemented the required methods and returned a valid version number. This means the Control Object's Open(DeviceName) method will return success. Now, let's add some code to fire a simple data event to the Control Object.

11. Add a FireDataEvent method to CMyVirtualMsrSO.

    void CMyVirtualMsrSO::FireDataEvent(void)
    {
        // Status argument for the CO's SOData event request method (arbitrary demo value).
        VARIANT v;
        v.vt = VT_I4;
        v.lVal = 10;
        m_pDriver.Invoke1(L"SOData", &v);
    }

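Please note that the code below only demonstrates data events. The thread function waits 500 ms and then fires the event, and SetPropertyNumber starts that thread when the application sets DataEventEnabled. If the application calls the CO's Close method within those 500 ms, the SO is destroyed, and the application will then crash because pVirtualMsr no longer points to a valid object.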

    DWORD WINAPI MyThreadFunction(LPVOID lpParam)
    {
        CMyVirtualMsrSO* pVirtualMsr = (CMyVirtualMsrSO*)lpParam;
        Sleep(500);
        if (NULL != pVirtualMsr)
        {
            pVirtualMsr->FireDataEvent();
        }
        return 0;
    }

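    STDMETHODIMP CMyVirtualMsrSO::SetPropertyNumber(LONG PropIndex, LONG Number)
    {
        switch (PropIndex)
        {
        case PIDX_DeviceEnabled:
            break;
        case PIDX_DataEventEnabled:
        {
            // Demo only: start a thread that fires a data event after a delay.
            HANDLE hThread = CreateThread(
                NULL,              // default security attributes
                0,                 // use default stack size
                MyThreadFunction,  // thread function name
                this,              // argument to thread function
                0,                 // use default creation flags
                NULL);             // thread identifier is not needed
            if (NULL != hThread)
            {
                // The thread keeps running; we only release our handle to it.
                CloseHandle(hThread);
            }
            break;
        }
        default:
            break;
        }
        return S_OK;
    }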

Rebuild the project and register the DLL. Now we can easily test our SO with an MFC or a VB test application.
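
The demo thread can outlive the SO, which is the crash described in the note before the thread function. One possible way to avoid this, sketched here in portable C++ rather than with the Win32 API, is to keep the worker inside an object that cancels and joins it before it is destroyed. DelayedEvent is a hypothetical helper invented for this sketch; it swaps CreateThread and Sleep for std::thread and a condition variable, and it does not address COM threading rules for calling the CO from another thread.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>

// Hypothetical helper: runs one callback after a delay and guarantees that
// the worker thread has finished before the object is destroyed.
class DelayedEvent {
public:
    DelayedEvent() = default;
    DelayedEvent(const DelayedEvent&) = delete;
    DelayedEvent& operator=(const DelayedEvent&) = delete;
    ~DelayedEvent() { cancel(); }

    void start(std::chrono::milliseconds delay, std::function<void()> fire) {
        assert(static_cast<bool>(fire));
        cancel();  // make sure no earlier worker is still running
        {
            std::lock_guard<std::mutex> lock(mutex_);
            cancelled_ = false;
        }
        worker_ = std::thread([this, delay, fire] {
            std::unique_lock<std::mutex> lock(mutex_);
            // wait_for returns true if cancel() was called before the delay elapsed.
            const bool cancelled =
                cv_.wait_for(lock, delay, [this] { return cancelled_; });
            lock.unlock();
            if (!cancelled) fire();
        });
    }

    // Wakes the worker early and waits for it to exit.
    void cancel() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            cancelled_ = true;
        }
        cv_.notify_all();
        if (worker_.joinable()) worker_.join();
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    bool cancelled_ = false;
    std::thread worker_;
};
```

In the SO, an object like this would be a member of CMyVirtualMsrSO, started from SetPropertyNumber and cancelled from CloseService, so that the callback can never run against a destroyed object.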

References

[1] UnifiedPOS 1.12 documentation (link)